What is Reproducible Research?

Last updated on 2025-05-09


Because this training was designed to be relevant to all disciplines, in today's workshop we are using the UK Reproducibility Network's (UKRN) broad definition of reproducibility:

“Research that is sufficiently transparent that someone with the relevant expertise can clearly follow, as relevant for different types of research:

- how it was done;

- why it was done in that way;

- the evidence that it established;

- the reasoning and/or judgements that were used; and

- how all of that led justifiably to the research findings and conclusions.”

In most discussions of reproducibility, especially in STEM disciplines, reproducible research means that someone given your data and methods can redo your research and reach the same conclusion.

We expect that anyone conducting the same research should come to the same conclusion. This is what allows us to trust the knowledge derived from that research and accept it as established.

Replicability and Repeatability

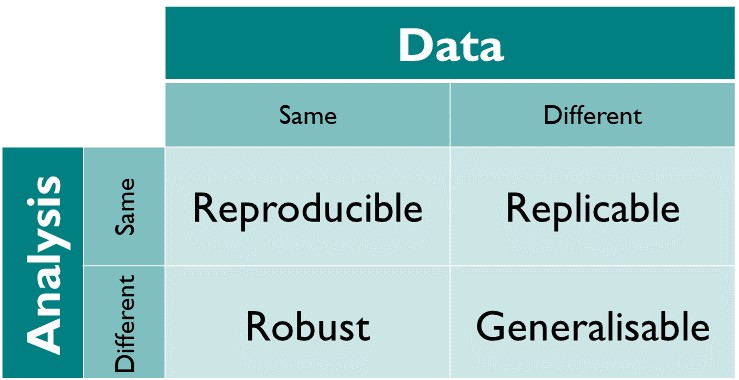

There are a number of related terms you may hear when we talk about reproducibility. Replicable and repeatable are two common ones.

The Turing Way offers the following descriptions:

How did we get here?

“It can be proven that most claimed research findings are false.” (Ioannidis, 2005)

In 2010…

- Daryl Bem publishes a paper in a highly reputable journal, the “Journal of Personality and Social Psychology”, providing evidence for precognition (people are psychic!)

- Bem took well-established effects in psychology and time-reversed them (the outcome/effect precedes the cause)

- Problem: precognition is outside the realm of reality, which makes the consistent evidence for it across 9 consecutive studies especially suspicious

- Researchers respond to Bem with replication attempts

- Galak et al. (2012) found no effect of precognition across 7 studies (N = 3,289)

- Ritchie et al. (2012) also failed to replicate these results in three studies, but when they submitted their paper to the same journal that had published Bem's research, it was rejected because the journal does “not publish straight replications”

In 2011…

- Simmons et al. (2011) publish a paper showing how flexibility in the design, analysis and reporting of a study can affect the statistical significance of its results

- They describe various common “Questionable Research Practices” (QRPs) that inflate false positives

- Example: a researcher is interested in the relationship between listening to music and memory performance

- Control Group 1: no music, with a matched task (e.g. colouring)

- Experimental Groups: Pop, Rock, Classical, R&B, Hip-hop, Jazz, Country, Blues

- The researcher finds a significant relationship only between listening to rock music and memory performance

- QRP 1: the researcher writes up the results, ignoring all the other conditions, and concludes that music affects memory

- QRP 2: the researcher initially predicted that music would improve memory but found the opposite, so they change their predictions to match the results


“If there are participants you don’t like, or trials, observers, or interviewers who gave you anomalous results, drop them […]. Go on a fishing expedition for something—anything—interesting.” (Bem, 2003)
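To see why QRP 1 is so misleading, here is a minimal simulation sketch in Python. It assumes a hypothetical version of the music-and-memory study in which music has no real effect at all: every memory score is drawn from the same normal distribution (mean 0, standard deviation 1), each of the eight genres is compared with the single control group using a two-sided z-test with a known standard deviation (a simplification chosen so the sketch needs only the standard library), and the study counts as a "finding" if any genre reaches p < 0.05. The function names and parameters (`two_sided_p`, `false_positive_rate`, 20 participants per group) are illustrative assumptions, not part of the original example.

```python
import math
import random

GENRES = ["Pop", "Rock", "Classical", "R&B", "Hip-hop", "Jazz", "Country", "Blues"]


def two_sided_p(group, control, sigma=1.0):
    """Two-sided z-test p-value for a difference in means, assuming a known SD (sigma)."""
    n1, n2 = len(group), len(control)
    diff = sum(group) / n1 - sum(control) / n2
    se = sigma * math.sqrt(1 / n1 + 1 / n2)
    z = diff / se
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)), the two-sided normal tail probability
    return math.erfc(abs(z) / math.sqrt(2))


def false_positive_rate(n_per_group=20, trials=2000, alpha=0.05, seed=1):
    """Fraction of simulated studies in which at least one genre 'differs' from control,
    even though music has NO real effect (all scores come from one distribution)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        control = [rng.gauss(0, 1) for _ in range(n_per_group)]
        p_values = []
        for genre in GENRES:  # hypothetical: every genre is drawn from the same distribution
            scores = [rng.gauss(0, 1) for _ in range(n_per_group)]
            p_values.append(two_sided_p(scores, control))
        if min(p_values) < alpha:  # report only the "significant" genre (QRP 1)
            hits += 1
    return hits / trials


if __name__ == "__main__":
    print(f"Independent-tests approximation: {1 - 0.95 ** len(GENRES):.3f}")
    print(f"Simulated rate with a shared control group: {false_positive_rate():.3f}")
```

If the eight tests were independent, the chance of at least one false positive would be 1 − 0.95⁸ ≈ 0.34. Because every genre is compared with the same control group, the tests are correlated and the simulated rate comes out somewhat lower, but it is still far above the 5% the researcher would implicitly claim by reporting only the rock condition.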

2012… How common are QRPs?

- John et al. (2012) asked ~2,000 researchers (1) how many QRPs they engaged in and (2) how many QRPs their colleagues engaged in

- ~60% admitted to not reporting all of their dependent (outcome) variables

- ~40% admitted to only reporting experiments that had worked

- ~50% admitted to engaging in optional stopping (deciding whether to collect more data after checking whether the results were statistically significant)

- These were research norms at the time
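Optional stopping can be sketched the same way. This minimal Python simulation assumes a hypothetical study with no true effect: observations are drawn from a standard normal distribution, the null hypothesis is that the mean is zero, and a two-sided z-test with a known standard deviation is used. One approach collects all 100 observations and tests once; the other tests after every batch of 10 and stops as soon as p < 0.05. The function names, batch size and sample size are assumptions made for illustration.

```python
import math
import random


def p_value_mean_zero(data, sigma=1.0):
    """Two-sided z-test p-value for H0: population mean = 0, assuming a known SD."""
    n = len(data)
    z = (sum(data) / n) / (sigma / math.sqrt(n))
    return math.erfc(abs(z) / math.sqrt(2))


def run_study(rng, batch=10, max_n=100, alpha=0.05, peek=True):
    """Simulate one study where the true effect is zero.

    peek=True:  test after every batch and stop as soon as p < alpha (optional stopping).
    peek=False: collect all max_n observations, then test once.
    Returns True if the study ends with a 'significant' (false positive) result.
    """
    data = []
    while len(data) < max_n:
        data.extend(rng.gauss(0, 1) for _ in range(batch))
        if peek and p_value_mean_zero(data) < alpha:
            return True  # stop collecting and write it up
    return p_value_mean_zero(data) < alpha


def simulated_false_positive_rate(peek, trials=2000, seed=2):
    """Fraction of simulated null studies that end with p < 0.05."""
    rng = random.Random(seed)
    return sum(run_study(rng, peek=peek) for _ in range(trials)) / trials


if __name__ == "__main__":
    print(f"Test once at n = 100:   {simulated_false_positive_rate(peek=False):.3f}")
    print(f"Peek after every 10:    {simulated_false_positive_rate(peek=True):.3f}")
```

Testing once keeps the false positive rate close to the nominal 5%, whereas peeking after every batch pushes it well above that, even though each individual test looks perfectly ordinary.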

Since then…

- There have been various efforts across disciplines to replicate published studies

The Reproducibility Project: Cancer Biology

- An 8-year effort to replicate experiments from high-impact cancer biology papers published between 2010 and 2012. The project was a collaboration between the Center for Open Science and Science Exchange.

When preparing replications of 193 experiments from 53 papers, the project ran into a number of challenges:

- only 2% of experiments had open data

- 0% of protocols were completely described

- for 32% of experiments, the original authors were not helpful or were unresponsive

- for 41% of experiments, the original authors were very helpful

Let's talk about this. Unresponsive authors are not always being malicious:

- Authors move to other institutions

- Authors can drop out of academia

- Authors may be research students who didn't continue in research

- Data may have left with research students, and the supervisor may lack the details or data on how the experiment was done

The bottom line…

- Replication issues aren’t always products of malicious intent

- Honest errors happen… a lot!

- (That said, check out Data Colada's blog posts on a Harvard University professor researching honesty, who was caught falsifying data)

Is there really a crisis?

The Reproducibility Crisis


In the Nature article “1,500 scientists lift the lid on reproducibility”, more than 1,500 researchers were surveyed about reproducibility.

Over 70% of the researchers surveyed had tried and failed to reproduce another scientist's experiments.

Over half had failed to reproduce their own experiments.

These are not insignificant numbers.

A number of interesting insights came from this survey:

- Half of the researchers surveyed agreed that there was a significant reproducibility crisis

- Almost 70% still trusted that the results were probably correct, even though these papers couldn't be reproduced

Let's now look at why this is important.

References

Definitions: The Turing Way Community. (2022). The Turing Way: A handbook for reproducible, ethical and collaborative research (Version 1.1.0) [Computer software]. https://doi.org/10.5281/zenodo.3233853. Licensed under CC-BY.

Illustration: The Turing Way Community. This illustration was created by Scriberia with The Turing Way community and is used under a CC-BY 4.0 licence. https://doi.org/10.5281/zenodo.3332807

Key Points

In this lesson, we have learnt:

- What reproducible research is

- The different terms used around reproducibility